Making AI hallucinations a thing of the past

Jan 31, 2025

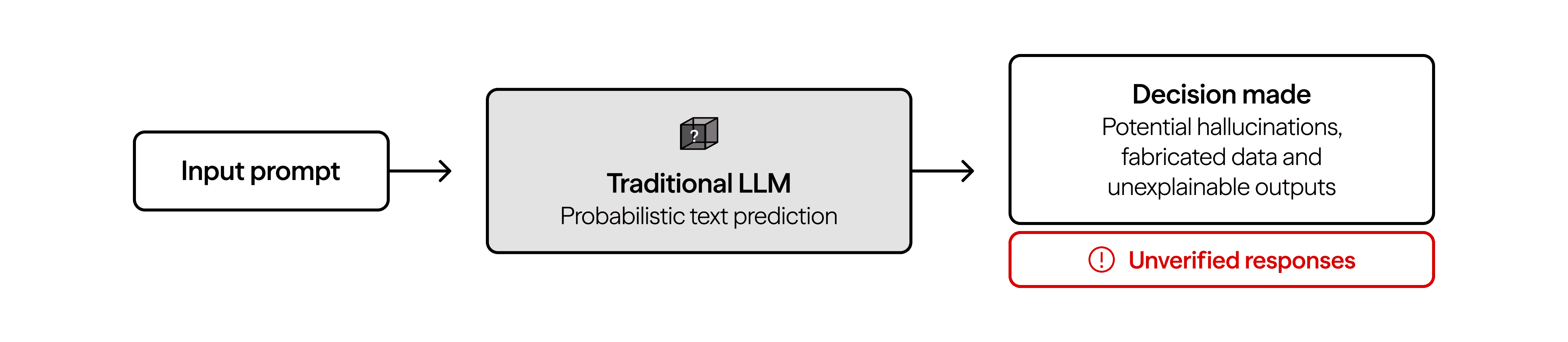

One of the biggest challenges facing large language models (LLMs) is their tendency to generate misleading or completely fabricated information, commonly known as hallucinations. LLMs have made remarkable progress, but because their core mechanism is probabilistic text generation rather than logical reasoning, hallucinations are an unavoidable issue.

Several key factors contribute to this problem:

Statistical Pattern Matching – LLMs generate responses by predicting the most probable next word rather than verifying facts.

Lack of True Understanding – They do not possess real-world knowledge or reasoning abilities; they simply mimic language patterns.

Dependence on Unverified Training Data – These models absorb biases, inaccuracies, and outdated information from their training datasets, making their outputs unreliable.

Overconfidence in Responses – Unlike human experts, who acknowledge uncertainty, LLMs often give confident-sounding answers even when they lack the correct information.

Since hallucinations are a direct result of an LLM's predictive nature, no amount of fine-tuning can completely eliminate them. This poses a significant challenge for companies relying solely on LLMs for mission-critical applications, as accuracy, reliability, and trust become serious concerns.
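
To make the first and last factors above concrete, here is a toy sketch of greedy next-token selection. The prompt and probabilities are invented for illustration and do not come from any real model; a real LLM computes its distribution with a neural network over a large vocabulary and often samples rather than always taking the top choice. The point is only that nothing in this step checks whether the chosen continuation is true.

```python
# Toy illustration only: these probabilities are invented, not measured.
NEXT_TOKEN_PROBS = {
    "The capital of Australia is": {
        "Sydney": 0.55,     # common in text, but wrong
        "Canberra": 0.40,   # correct
        "Melbourne": 0.05,
    },
}


def greedy_next_token(prompt: str) -> str:
    """Return the most probable continuation. No fact check happens here."""
    distribution = NEXT_TOKEN_PROBS[prompt]
    return max(distribution, key=distribution.get)


answer = greedy_next_token("The capital of Australia is")
print(answer)  # the likeliest token, stated with no signal of uncertainty
```

In this made-up distribution the likeliest continuation is the wrong one, and the function returns it without any indication of doubt.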

How UnlikelyAI eliminates hallucinations

At UnlikelyAI, we take a radically different approach by integrating symbolic reasoning with the power of LLMs. Our patented neurosymbolic platform is specifically designed to eliminate hallucinations by grounding AI outputs in structured logic rather than probabilistic text generation.

Universal Language (UL): Structured representations for precise reasoning

Unlike traditional LLMs, which rely on free-text generation, our system converts inputs (text, images, structured databases and graphs) into a structured format called Universal Language (or UL for short). This structured representation ensures every piece of information has a logical foundation before being used in reasoning.
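
The post does not show UL's actual syntax, so the following is only a hypothetical sketch of what "structured representation" can mean in general: a sentence is stored as a typed fact with a recorded source, and questions are answered by exact lookup rather than by generating text. The `Fact` class, the `is_capital_of` relation name, and the `example-gazetteer` source are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    """Hypothetical structured fact; not UnlikelyAI's actual UL format."""
    subject: str
    relation: str
    obj: str
    source: str  # where the fact came from, kept for later auditing


# Free text: "Canberra is the capital of Australia."
facts = {
    Fact("Canberra", "is_capital_of", "Australia", source="example-gazetteer"),
}


def holds(subject: str, relation: str, obj: str) -> bool:
    """True only if a matching fact is explicitly stored."""
    return any(
        (f.subject, f.relation, f.obj) == (subject, relation, obj)
        for f in facts
    )


print(holds("Canberra", "is_capital_of", "Australia"))
print(holds("Sydney", "is_capital_of", "Australia"))
```

Because every stored fact carries a source, a claim with no stored fact behind it simply fails the lookup instead of being produced anyway.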

Reasoning Engine (RE): Logical inference over probabilities

Instead of predicting words based on statistical likelihoods, our Reasoning Engine (RE) follows explicit logical steps to derive answers from verifiable sources. Key capabilities include:

Multi-step logical deductions: The RE executes complex reasoning by following structured logical rules.

Non-guessing approach: If sufficient information is unavailable, our system responds with "I don’t know" rather than fabricating an answer.

Consistency across queries: The deterministic nature of the RE ensures the same input always results in the same output.
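
The three capabilities above can be illustrated with a very small forward-chaining sketch. This is not the RE itself, whose internals the post does not describe; it is a generic example that assumes a single rule (the hypothetical `located_in` relation is transitive) and a two-fact knowledge set invented for the demonstration.

```python
# Hypothetical facts as (subject, relation, object) tuples.
FACTS = {
    ("Canberra", "located_in", "Australian Capital Territory"),
    ("Australian Capital Territory", "located_in", "Australia"),
}


def infer_closure(facts):
    """Forward-chain one rule until nothing new appears: located_in is transitive."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        # sorted() copies the set, so adding to `known` inside the loop is safe
        # and the iteration order is the same on every run.
        for (a, r1, b) in sorted(known):
            for (c, r2, d) in sorted(known):
                derived = (a, "located_in", d)
                if r1 == r2 == "located_in" and b == c and derived not in known:
                    known.add(derived)
                    changed = True
    return known


def answer(subject, relation, obj):
    """Answer 'Yes' only when the fact is stored or derivable; never guess."""
    if (subject, relation, obj) in infer_closure(FACTS):
        return "Yes"
    return "I don't know"


print(answer("Canberra", "located_in", "Australia"))  # needs two facts chained
print(answer("Sydney", "located_in", "Australia"))    # nothing known about Sydney
```

The first question is answered by chaining two stored facts; the second has no supporting facts, so the sketch declines to answer instead of guessing. With no randomness involved, repeating a query returns the same result.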

Curated Knowledge Base: Verified and trustworthy information

Rather than relying on vast, unverified internet data like traditional LLMs, our system leverages a curated knowledge base, continuously updated with:

General knowledge (verified scientific and factual databases)

Domain-specific knowledge (customer-provided or industry-specific data)

Explicit logical rules defining relationships between different concepts

This approach minimizes the risk of incorrect or fabricated information, ensuring accuracy and reliability in high-stakes applications.

Auditability and transparency

One of the biggest flaws in traditional LLMs is the black-box nature of their decision-making process. Our approach ensures:

Step-by-step reasoning visibility: Users can trace each decision back to its logical foundation.

No black-box processing: Unlike traditional AI, our neurosymbolic model provides complete transparency in its decision-making process.

Compliance-friendly AI: Because every decision is backed by structured reasoning, our system meets stringent regulatory requirements for AI accountability.
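
As a rough illustration of step-by-step reasoning visibility, the sketch below records, for every derived conclusion, the premises that produced it, and then prints that chain back down to sourced facts. The data, the `located_in` rule, and the `example-gazetteer` source label are assumptions for this example; the post does not describe how the platform stores or presents its reasoning traces.

```python
# Hypothetical sourced facts: each starting fact maps to where it came from.
SOURCED_FACTS = {
    ("Canberra", "located_in", "ACT"): "source: example-gazetteer",
    ("ACT", "located_in", "Australia"): "source: example-gazetteer",
}


def derive_with_trace(sourced_facts):
    """Apply transitivity of located_in, remembering the premises of each conclusion."""
    # Maps fact -> source string (given fact) or (premise1, premise2) (derived fact).
    justification = dict(sourced_facts)
    changed = True
    while changed:
        changed = False
        for f1 in sorted(justification):
            for f2 in sorted(justification):
                conclusion = (f1[0], "located_in", f2[2])
                if (f1[1] == f2[1] == "located_in" and f1[2] == f2[0]
                        and conclusion not in justification):
                    justification[conclusion] = (f1, f2)
                    changed = True
    return justification


def explain(fact, justification, depth=0):
    """Return the audit trail for a fact as indented lines."""
    reason = justification[fact]
    indent = "  " * depth
    if isinstance(reason, str):
        return [f"{indent}{fact} [{reason}]"]
    lines = [f"{indent}{fact} [derived by transitivity]"]
    for premise in reason:
        lines.extend(explain(premise, justification, depth + 1))
    return lines


trace = derive_with_trace(SOURCED_FACTS)
for line in explain(("Canberra", "located_in", "Australia"), trace):
    print(line)
```

The printed trail lists the conclusion first and the two sourced premises indented beneath it, which is the kind of record an auditor could check line by line.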

The future of AI: reliable, explainable and hallucination-free

Traditional LLMs will always struggle with hallucinations due to their probabilistic nature. UnlikelyAI is solving this problem at its core by moving beyond pure LLM architectures. Our neurosymbolic platform ensures that AI systems are trustworthy, consistent, and fully explainable, making them ideal for high-stakes industries like finance, healthcare, and legal applications.